Shannon's Entropy for Images

25 Jun 2022

Image Entropy

To calculate Shannon's entropy \(H\) for data \(x\) we use

\[\begin{align} H = -\sum_i {\bf{p}}(x_i)\log_2 {\bf{p}}(x_i), \end{align}\]

where \({\bf{p}}(x_i)\) is the probability of the value \(x_i\). Because the logarithm is base 2, the entropy is measured in bits.

For an image we first need to fix the pixel depth \(p\): often \(p=8\), that is 8 bits or 256 levels per pixel, or \(p=\infty\) for real-valued pixels. We then bin the pixel values and use the relative frequency of each bin as its probability.
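The binning step can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: it assumes the image is a nested list of integer pixel values and treats every distinct value as its own bin. The function name `image_entropy` is made up for this example.

```python
import math
from collections import Counter

def image_entropy(pixels):
    """Shannon entropy, in bits, of a 2-D list of integer pixel values.

    Assumption: each distinct pixel value is its own bin, so the
    probability of a value is its count divided by the pixel count.
    """
    flat = [value for row in pixels for value in row]
    total = len(flat)
    counts = Counter(flat)
    # p * log2(1/p) is the same term as -p * log2(p).
    return sum((c / total) * math.log2(total / c) for c in counts.values())

constant = [[7, 7], [7, 7]]        # one level only
two_level = [[0, 255], [255, 0]]   # two equally likely levels
four_level = [[0, 1], [2, 3]]      # every pixel different

print(image_entropy(constant))     # 0.0
print(image_entropy(two_level))    # 1.0
print(image_entropy(four_level))   # 2.0
```

For real-valued images the values would first have to be quantised into bins; how to choose those bins is left open here.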

Maximum Entropy value for Images

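To find the maximum possible entropy for a specific image size \(m\times n\) and pixel depth \(p\) we observe that entropy is maximized when all of the probabilities are equal, that is, when every pixel value occurs the same number of times. Each pixel value is then assigned to \(mn / 2^p\) pixels. So for a \(16\times 16\) image with \(p=8\) there is exactly 1 pixel per pixel value (\(256 / 2^8\)), while a \(32\times 32\) image would have 4 pixels per pixel value.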

For small image sizes there may be too few pixels to use every pixel value. For example, a \(7\times 7\) image can only support a pixel depth of \(\log_2 7^2 \approx 5.615\).
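As a quick check of these numbers, here is a small Python sketch of the largest usable depth, \(\min(p, \log_2(mn))\), and of the number of pixels that share each value. The helper names `effective_depth` and `pixels_per_value` are made up for this illustration.

```python
import math

def effective_depth(m, n, p=math.inf):
    """Largest usable pixel depth for an m x n image: min(p, log2(m*n))."""
    return min(p, math.log2(m * n))

def pixels_per_value(m, n, p=math.inf):
    """Pixels sharing each value when all usable levels occur equally often."""
    return (m * n) / 2 ** effective_depth(m, n, p)

# A 32 x 32 image at depth 8 has more pixels than levels.
print(effective_depth(32, 32, 8), pixels_per_value(32, 32, 8))

# A 7 x 7 image cannot use all 256 levels, so the depth is capped.
print(effective_depth(7, 7, 8), pixels_per_value(7, 7, 8))
```

When the depth is capped by the image size, every pixel gets its own value, so the pixels-per-value figure comes out as 1 up to floating-point rounding.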

With equal probabilities, each of the \(2^{\hat p}\) usable pixel values covers \(mn/2^{\hat p}\) of the \(mn\) pixels and so has probability \(1/2^{\hat p}\). Our maximum entropy, for an \(m\times n\) image with pixel depth \(p\), is therefore

\[\begin{align} H_{\mathrm{max}}^p(m,n) &= -2^{\hat p}\times\frac{1}{2^{\hat p}}\log_2 \left(\frac{1}{2^{\hat p}}\right), \\ \label{eqn:maxent} &= \hat p, \end{align}\]

where \(\hat p= \min(p, \log_2(mn))\).

We note that for the common case for arrays of float values, where the pixel depth is infinite, the ``effective’’ pixel depth is \(\log_2( nm))\) and the maximum entropy Equation above can be simplified to,

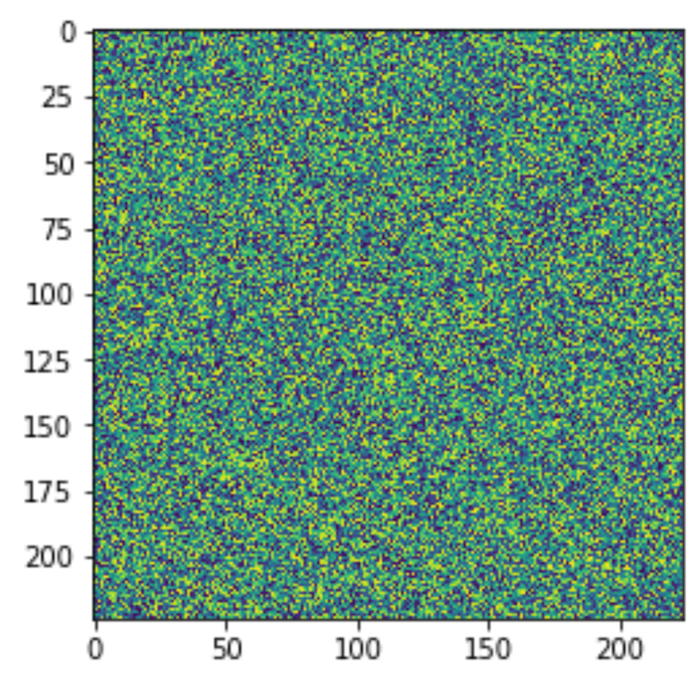

\[\begin{align} H_{\mathrm{max}}^{\infty}(m,n) &= \log_2 \left(mn\right). \end{align}\]If you want to know what a maximum entropy image looks like here is such a thing,
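One way to build such an image yourself, sketched here under the assumption that "maximum entropy" simply means every pixel holds a different value: shuffle the integers \(0,\dots,mn-1\) into an \(m\times n\) grid and confirm that its entropy matches \(\log_2(mn)\). This is not necessarily how the picture in this post was made, and the function names are illustrative only.

```python
import math
import random
from collections import Counter

def max_entropy_image(m, n, seed=0):
    """Return an m x n nested list in which every pixel value is distinct."""
    rng = random.Random(seed)
    values = list(range(m * n))
    rng.shuffle(values)
    # Slice the shuffled values into m rows of n pixels each.
    return [values[r * n:(r + 1) * n] for r in range(m)]

def entropy_bits(image):
    """Shannon entropy in bits, one bin per distinct pixel value."""
    flat = [value for row in image for value in row]
    total = len(flat)
    counts = Counter(flat)
    return sum((c / total) * math.log2(total / c) for c in counts.values())

image = max_entropy_image(7, 7)
# The two printed numbers should agree up to floating-point rounding.
print(entropy_bits(image), math.log2(7 * 7))
```

Any arrangement of the distinct values has the same entropy, because the measure depends only on the histogram and not on where the pixels sit.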

Thanks!